Code

library(ggplot2)

iris |>

ggplot(aes(Sepal.Length, Sepal.Width, group = Species, colour = Species, shape = Species)) +

geom_point()

Jon Minton

December 30, 2023

This is part of a series of posts which introduce and discuss the implications of a general framework for thinking about statistical modelling. This framework is most clearly expressed in King, Tomz, and Wittenberg (2000).

In the last post in this series I’ve reintroduced standard linear regression and logistic regression as both being special versions of the same generalised model formula.

Stochastic Component

\[ Y_i \sim f(\theta_i, \alpha) \]

Systematic Component

\[ \theta_i = g(X_i, \beta) \]

With standard linear regression the link function \(g(.)\) is \(I(.)\), i.e. the identity function, meaning what goes in, is what comes out. By contrast for logistic regression \(g(.)\) is the logistic function, which squishes and squashes any real number as an input onto a value between 0 and 1 as an output.

Though it’s not always phrased this way, a motivating question behind the construction of most statistical models is, “What influence does a single input to the model, \(x_j\), have on the output, \(Y\)?”1 For a single variable \(x_j\) which is either present (1) or absent (0), this is in effect asking what is \(E(Y | x_j = 1) - E(Y | x_j = 0)\) ?2

Let’s look at a linear regression case, then a logistic regression case.

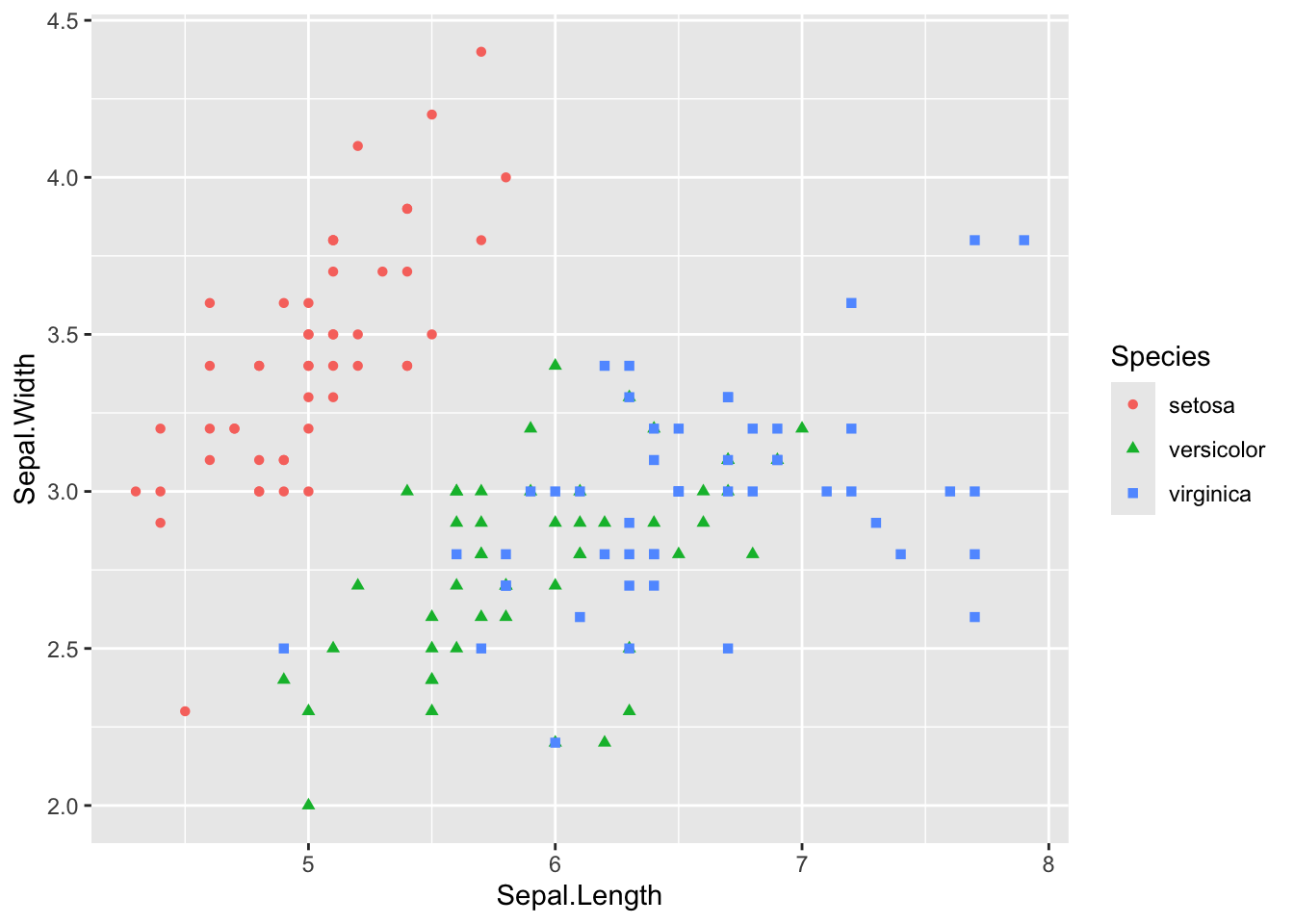

Using the iris dataset, let’s try to predict Sepal Width (a continuous variable) on Sepal Length (a continuous variable) and whether the species is setosa or not (a discrete variable). As a reminder, the data relating these three variables look as follows:

Let’s now build the model:

Call:

lm(formula = Sepal.Width ~ Sepal.Length + is_setosa, data = df)

Coefficients:

(Intercept) Sepal.Length is_setosaTRUE

0.7307 0.3420 0.9855 The coefficients \(\boldsymbol{\beta} = \{\beta_0, \beta_1, \beta_2\}\) are \(\{0.73, 0.34, 0.99\}\), and refer to the intercept, Sepal Length and is_setosa respectively.

If we assume a Sepal Length of 6, for example, then the expected Sepal Width (the thing we are predicting) is 0.73 + 6 * 0.34 + 0.99 or about 3.77 in the case where is_setosa is true, and 0.73 + 6 * 0.34 or about 2.78 where is_setosa is false.

The difference between these two values, 3.77 and 2.78, i.e. the ‘influence of setosa’ on the outcome, is 0.99, i.e. the \(\beta_2\) coefficient shown before. In fact, for any conceivable (and non-conceivable, i.e. negative) value of Sepal Length, the difference is still 0.99.

This is the \(\beta_2\) coefficient, and the reason why, for linear regression, and almost exclusively linear regression, looking at the coefficients themselves provides substantively meaningful information (something King, Tomz, and Wittenberg (2000) calls a ‘quantity of interest’) about the size of influence that a predictor has on a response.

Now let’s look at an example using logistic regression. We will use another tiresomely familiar dataset, mtcars. We are interested in estimating the effect that having a straight engine (vs=1) has on the probability of the car having a manual transmission (am=1). Our model also tries to control for the miles-per-gallon (mpg). The model specification is shown, the model is run, and the coefficeints are all shown below:

Call: glm(formula = am ~ mpg + vs, family = binomial(), data = mtcars)

Coefficients:

(Intercept) mpg vs

-9.9183 0.5359 -2.7957

Degrees of Freedom: 31 Total (i.e. Null); 29 Residual

Null Deviance: 43.23

Residual Deviance: 24.94 AIC: 30.94Here the coefficients \(\boldsymbol{\beta} = \{\beta_0, \beta_1, \beta_2\}\) are \(\{-9.92, 0.54, -2.80\}\), and refer to the intercept, mpg, and vs respectively.

But what does this actually mean, substantively?

A very common approach to trying to answer this question is to look at the statistical significance of the coefficients, which we can do with the summary() function

Call:

glm(formula = am ~ mpg + vs, family = binomial(), data = mtcars)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -9.9183 3.4942 -2.839 0.00453 **

mpg 0.5359 0.1967 2.724 0.00644 **

vs -2.7957 1.4723 -1.899 0.05758 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 43.230 on 31 degrees of freedom

Residual deviance: 24.944 on 29 degrees of freedom

AIC: 30.944

Number of Fisher Scoring iterations: 6A common practice in many social and health sciences is to offer something like a narrative summary of the above, something like:

Our logistic regression model indicates that manualness is positively and significantly associated with our measure of fuel efficiency (p < 0.01). There is also an indication of a negative association with straight engine, but this effect does not quite meet conventional thresholds for statistical significance (p < 0.10).

This above practice is known as ‘star-gazing’, because summary tables like those above tend to have one or more * symbols in the final row, if the value of the Pr(>|z|) is below 0.05, and narrative summaries like those just above tend to involve looking at the number of stars in each row, alongside whether the Estimate values have a minus sign in front of them.

Star gazing is a very common practice. It’s also a terrible practice, which - ironically - turns the final presented output of a quantitative model into the crudest of qualitative summaries (positive, negative; significant, not significant). Star gazing is what researchers tend to default to when presented with model outputs from the above because, unlike in the linear regression example, the extent to which the \(\beta\) coefficients answer substantive ‘how-much’-ness questions, like “How much does having a straight engine change the probability of manual transmission?, is not easily apparent from the coefficients themselves.

So, how can we do better?

One approach is to standardise the data that goes into the model before passing them to the model. Standardisation means attempting to make the distribution and range of different variables more similar, and is especially useful when comparing between different continuous variables.

To give an example of this, let’s look at a specification with weight (wt) and horsepower (hp) in place of mpg, but keeping engine-type indicator (vs):

Call:

glm(formula = am ~ vs + wt + hp, family = binomial(), data = mtcars)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 25.35510 11.24613 2.255 0.0242 *

vs -3.12906 2.92958 -1.068 0.2855

wt -9.64982 4.05528 -2.380 0.0173 *

hp 0.03242 0.01959 1.655 0.0979 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 43.2297 on 31 degrees of freedom

Residual deviance: 8.5012 on 28 degrees of freedom

AIC: 16.501

Number of Fisher Scoring iterations: 8Here both wt and hp are continuous variables.

A star gazing zombie might say something like

manualness is negatively and significantly associated with weight (p < 0.05); there is a positive association with horsepower but this does not meet standard thresholds of statistical significance (0.05 < p < 0.10).

A slightly better approach would be to standardise the variables wt and hp before passing to the model. Standardising means trying to set the variables to a common scale, and giving the variables more similar statistical characteristics.

Call:

glm(formula = am ~ vs + wt_z + hp_z, family = binomial(), data = mtcars_z)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -0.9348 1.4500 -0.645 0.5191

vs -3.1291 2.9296 -1.068 0.2855

wt_z -9.4419 3.9679 -2.380 0.0173 *

hp_z 2.2230 1.3431 1.655 0.0979 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 43.2297 on 31 degrees of freedom

Residual deviance: 8.5012 on 28 degrees of freedom

AIC: 16.501

Number of Fisher Scoring iterations: 8wt_z is the standardised version of wt, and hp_z is the standardised version of hp. By convention, whereas unstandardised coefficients are usually referred to as \(\beta\) (‘beta’) coefficients, standardised coefficients are instead referred to as \(b\) coefficients. But really, it’s the same model.

Note the p value of wt_z is the same as for wt, and the p value of hp_z is the same as that for hp. Note also the directions of effect are the same: the coefficients on wt and wt_z are both negative, and the coefficients of hp and hp_z are both positive.

This isn’t a coincidence. Of course standardising can’t really add any new information, can’t really change the relationship between a predictor and a response. It’s not really a new variable, it’s the same old variable, so the relationship between predictor and response that there used to be is still there now.

So why bother standardising?

One reason is it gives, subject to some assumptions and caveats, a way of gauging the relative importance of the two different continuous variables, by allowing a slightly more meaningful comparison between the two coefficients.

In this case, we have a standardised \(b\) coefficient of -9.44 for wt_z, and of 2.22 for hp_z. As with the unstandardised coefficients we can still assert that manualness is negatively associated with weight, and positively associated with horsepower. But now we can also compare the two numbers -9.44 and 2.22. The ratio of these two numbers is around 4.3. So, we might hazard to suggest something like:

a given increase in weight is around four times as important in negatively predicting manual transmission (i.e. in predicting an automatic transmission) as an equivalent increase in horsepower is in positively predicting manual transmission.

This isn’t a statement that’s easy to parse, but does at least allow slightly more information to be gleamed from the model. For example, it implies that, if a proposed change to a vehicle leads to similar relative (standardised) increases in both weight and horsepower then, as the weight effect is greater than the horsepower effect, the model will predict a decreased probability of manualness as a result.

But what about the motivating question, “What’s the effect of a straight engine (vs=1) on the probability of manual transmission (am=1)?”

The problem, unlike with the linear regression, is this is now a badly formulated question, based on an incorrect premise. The problem is with the word ‘the’, which implies there should be a single answer to this question, i.e. that the effect of vs on the probability of am=1 should always be the same. But, at least when it comes to absolute changes in the probability of am=1, this is no longer the case, as it depends on the values of the other variables in the model.

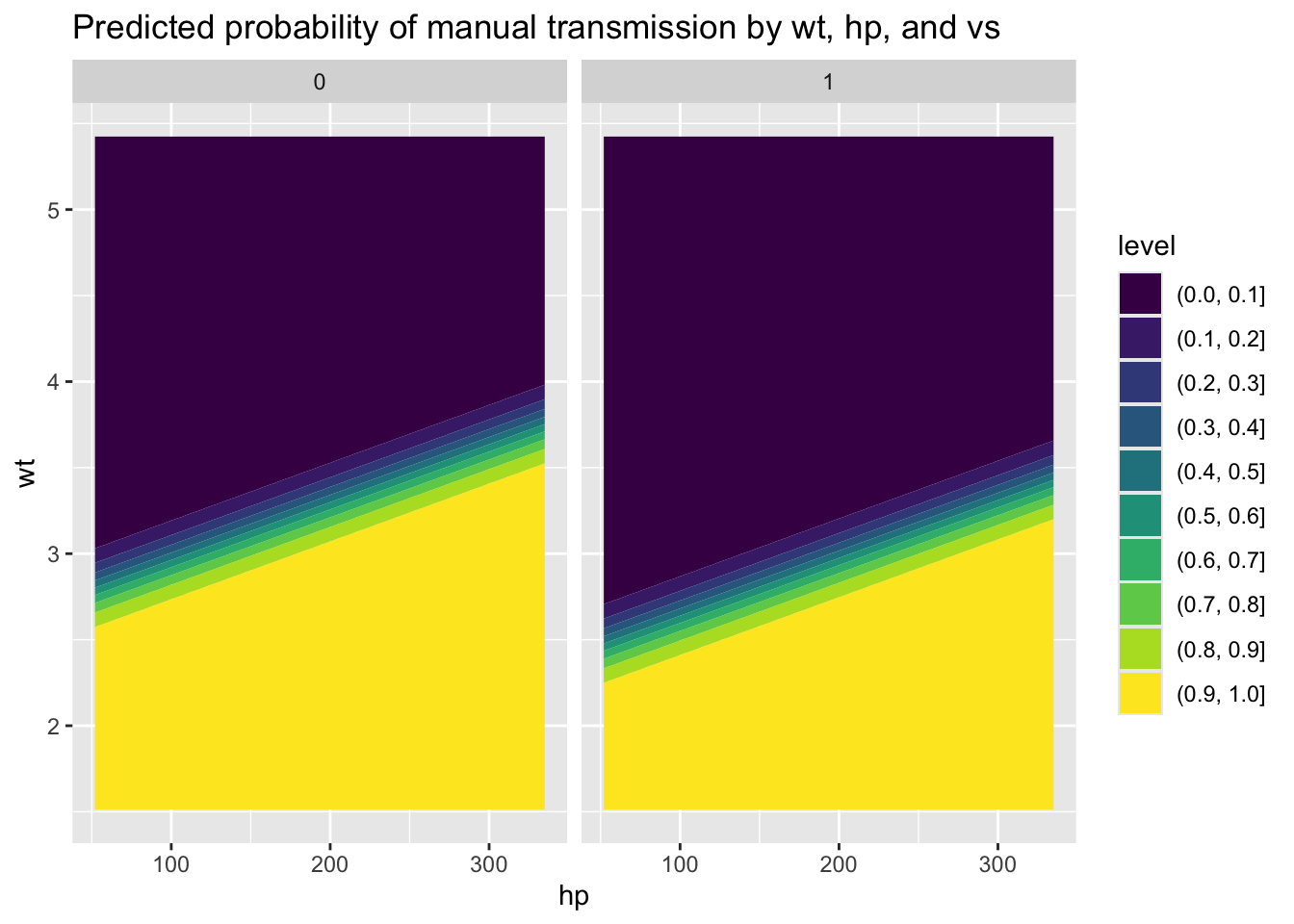

Instead of assuming vs=1 has a single effect on P(am=1), we instead need to think about predictions of the marginal effects of vs on am in the context of other plausible values of the other predictors in the model, wt and hp. This involves asking the model a series of well formulated and specific questions.

Before we do that, however, there’s a useful heuristic that can be employed when looking at discrete variables and using a logistic regression specification. The heuristic, which is based on the properties of the logistic function,3 is called divide-by-four. What this means is that, if we take the coefficient on vs of -3.13, and divide this value by four, we get a value of -0.78. Notice that the absolute value of -0.78 is between 0 and 1.4 What this value gives is the maximum possible effect that the discrete variable (the presence rather than absence of a straight engine) has on the probability of being a manual transmission. We can say, “a straight engine reduces the probability of a manual transmission by up to 78%”

But, as mentioned, this doesn’t quite answer the motivating question, it gives an upper bound to the answer, not the answer itself.5 We can instead start to get a sense of ‘the’ effect of the variable vs on P(am=1) by asking the model a series of questions.

We can start by getting the range of observed values for the two continuous variables, hp and mpg:

[1] 52[1] 335[1] 1.513[1] 5.424We can then ask the model to make predictions of \(P(am=1)\) for a large number of values of hp and wt within the observed range, both in the condition in which vs=0 and in the condition in which vs=1. The expand_grid function6 can help us do this:

For each of these permutations of inputs, we can use the model to get a conditional prediction. For convenience, we can also attach this as an additional column to the predictor data frame:

predictions_predictors_straight <- predictors_straight |>

mutate(

p_manual = predict(mod_logistic_2, type = "response", newdata = predictors_straight)

)

predictions_predictors_vshaped <- predictors_vshaped |>

mutate(

p_manual = predict(mod_logistic_2, type = "response", newdata = predictors_vshaped)

)We can see how the predictions vary over hp and wt using a heat map or contour map:

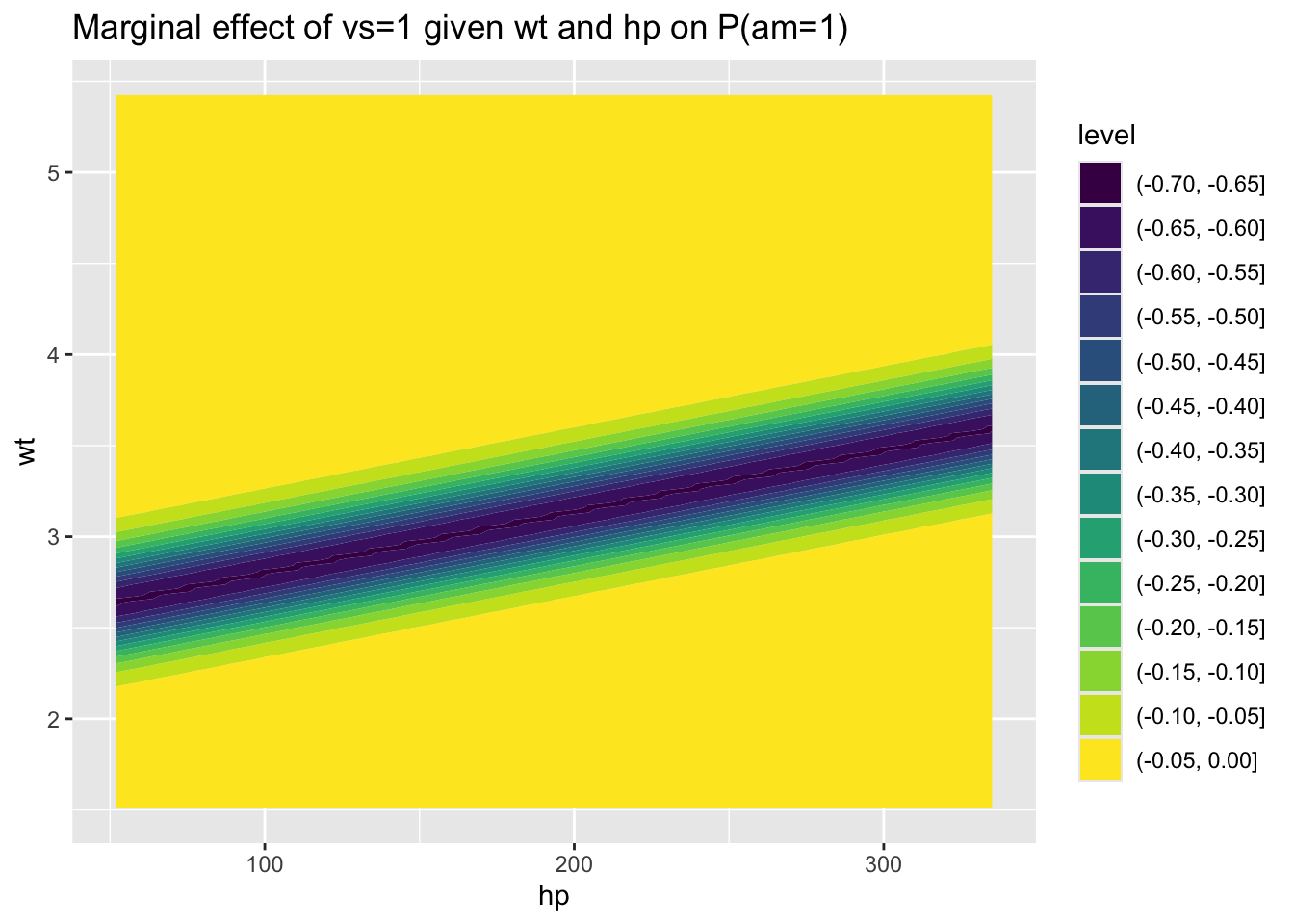

We can also produce a contour map of the differences between these two contour maps, i.e. the effect of a straight (vs=1) compared with v-shaped (vs=0) engine, which gets us a bit closer to the answer:

predictions_predictors_straight |>

bind_rows(

predictions_predictors_vshaped

) |>

group_by(hp, wt) |>

summarise(

diff_p_manual = p_manual[vs==1] - p_manual[vs==0]

) |>

ungroup() |>

ggplot(

aes(x = hp, y = wt, z = diff_p_manual)

) +

geom_contour_filled() +

labs(

title = "Marginal effect of vs=1 given wt and hp on P(am=1)"

)

We can see here that, for large ranges of wt and hp, the marginal effect of vs=1 is small. However, for particular combinations of hp and wt, such as where hp is around 200 and wt is slightly below 3, then the marginal effect of vs=1 becomes large, up to around a -70% reduction in the probability of manual transmission. (i.e. similar to the theoretical maximum marginal effect of around -78%).

So, what’s the effect of vs=1 on P(am=1)? i.e. how should we boil down all these 10,000 predicted effect sizes into a single effect size?

I guess, if we have to try to answer this silly question, then we could take the average effect size…

# A tibble: 1 × 1

mean_diff_p_manual

<dbl>

1 -0.0821So, we get an average difference of around -0.08, i.e. about an 8% reduction in probability of manual transmission.

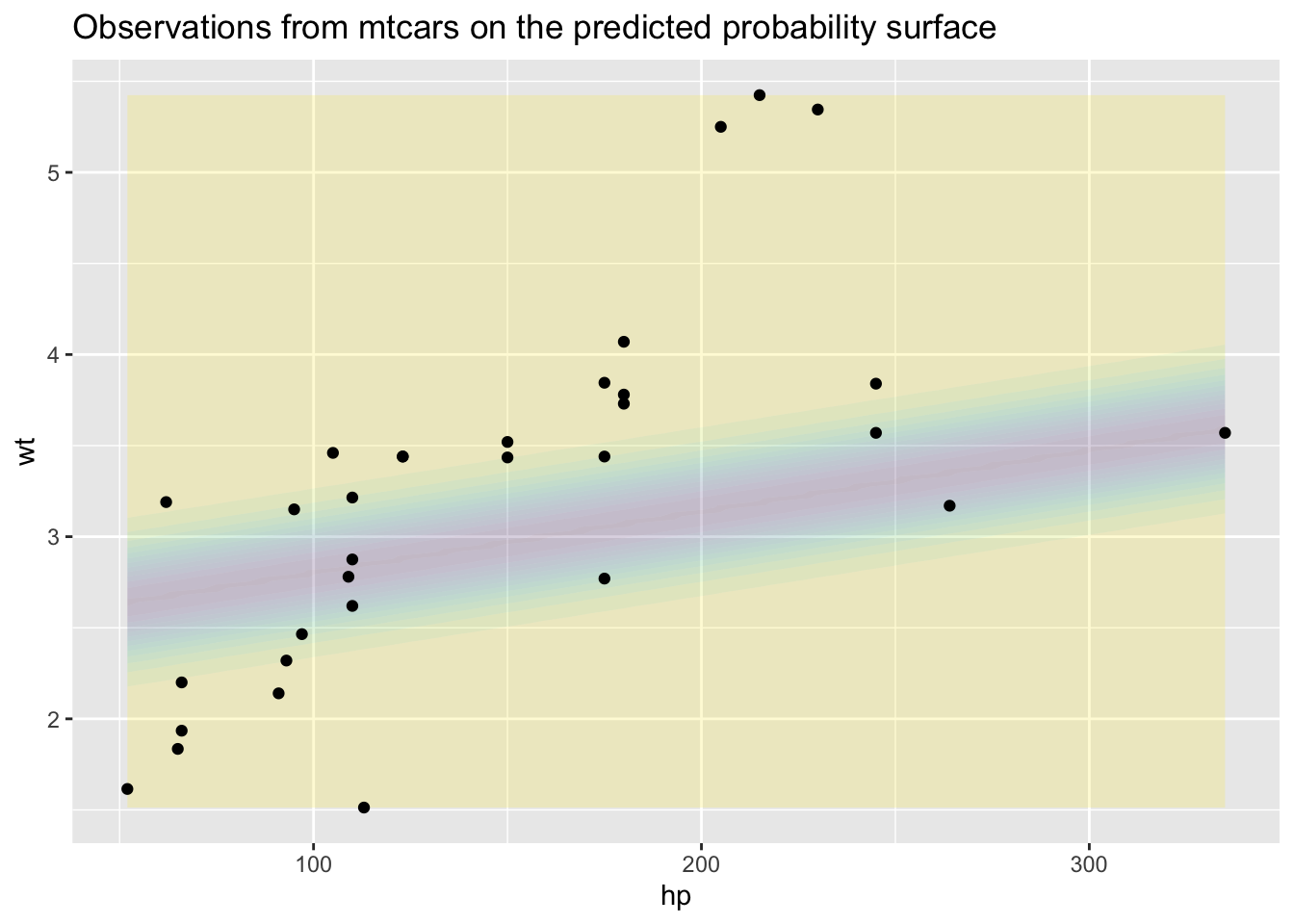

Is this a reasonable answer? Probably not, because although the permutations of wt and hp we looked at come from the observed range, most of these combinations are likely very ‘theoretical’. We can get a sense of this by plotting the observed values of wt and hp onto the above contour map:

predictions_predictors_straight |>

bind_rows(

predictions_predictors_vshaped

) |>

group_by(hp, wt) |>

summarise(

diff_p_manual = p_manual[vs==1] - p_manual[vs==0]

) |>

ungroup() |>

ggplot(

aes(x = hp, y = wt, z = diff_p_manual)

) +

geom_contour_filled(alpha = 0.2, show.legend = FALSE) +

labs(

title = "Observations from mtcars on the predicted probability surface"

) +

geom_point(

aes(x = hp, y = wt), inherit.aes = FALSE,

data = mtcars

)

Perhaps a better option, then, would be to calculate an average marginal effect using the observed values, but switching the observations for vs to 1 in one scenario, and 0 in another scenario:

predictions_predictors_observed_straight <- mtcars |>

select(hp, wt) |>

mutate(vs = 1)

predictions_predictors_observed_straight <- predictions_predictors_observed_straight |>

mutate(

p_manual = predict(mod_logistic_2, type = "response", newdata = predictions_predictors_observed_straight)

)

predictions_predictors_observed_vshaped <- mtcars |>

select(hp, wt) |>

mutate(vs = 0)

predictions_predictors_observed_vshaped <- predictions_predictors_observed_vshaped |>

mutate(

p_manual = predict(mod_logistic_2, type = "response", newdata = predictions_predictors_observed_vshaped)

)

predictions_predictors_observed <-

bind_rows(

predictions_predictors_observed_straight,

predictions_predictors_observed_vshaped

)

predictions_marginal <-

predictions_predictors_observed |>

group_by(hp, wt) |>

summarise(

diff_p_manual = p_manual[vs==1] - p_manual[vs==0]

)

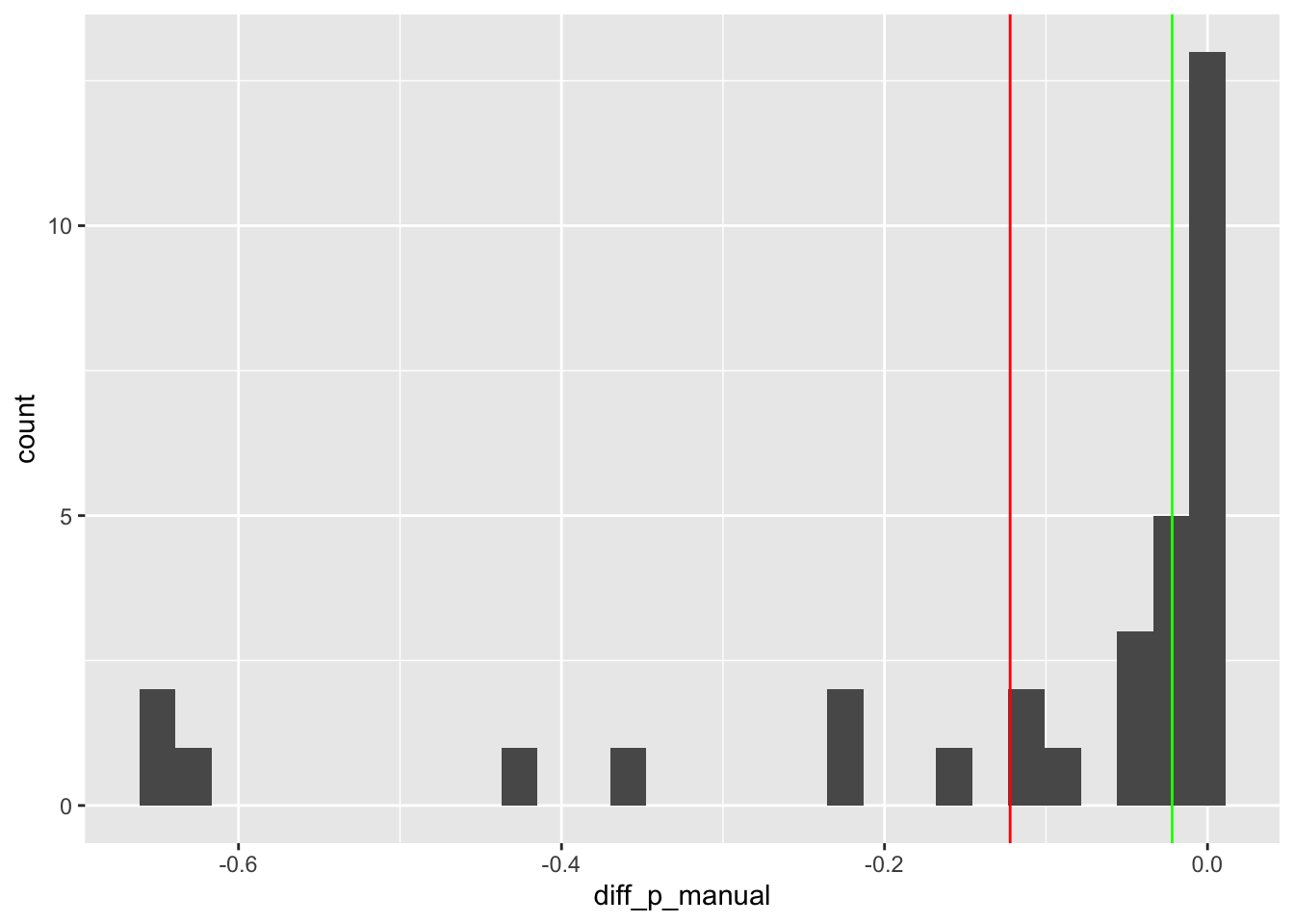

predictions_marginal |>

ggplot(aes(x = diff_p_manual)) +

geom_histogram() +

geom_vline(aes(xintercept = mean(diff_p_manual)), colour = "red") +

geom_vline(aes(xintercept = median(diff_p_manual)), colour = "green")

In the above the red line indicates the mean value of these marginal differences, which is -0.12, and the green line the median value of these differences, which is around -0.02. So, even with just these two measures of central tendency, there’s around a six-fold difference in the estimate of ‘the effect’. We can also see there’s a lot of variation, from around nothing (right hand side), to around a 65% reduction (left hand side).

If forced to give a simple answer (to this overly simplistic question), we might plump for the mean for theoretical reasons, and say something like “The effect of a straight engine is to reduce the probability of a manual transmission by around an eighth”. But I’m sure, having seen how much variation there is in these marginal effects, we can agree this ‘around an eighth’ answer, or any single number answer, is likely to be overly reductive.

Hopefully, however, it is more informative than ‘statistically significant and negative’, (the stargazing approach) or ‘up to around 78%’ (the divide-by-four approach).7

Linear regression tends to give a false impression about how straightforward it is to use a model to answer questions of the form “What is the effect of x on y?”. This is because, for linear regression, but few other model specifications, the answer to this question is in the \(\beta\) coefficients themselves. For other model specifications, like the logistic regression example above, the correct-but-uninformative answer tends to be “it depends”, and potentially more informative answers tend to require a bit more work to derive and interpret.

This post concludes the first section of this blog series. We showed the importance of producing predictions from models, rather than just staring at tables of coefficients and producing qualitative ‘stargazing’ summaries of their statistical significance and direction of effect. Statistical significance of individual coefficients almost never answers questions of substantive significance, which instead come from model predictions.

However in the predictions so far, we’ve accidentially pretended to know more than we do. For each prediction, despite imperfect knowledge, we’ve presented point estimates, a single prediction, implying our estimates are sometimes perfectly precise. To be more honest to the user, we should instead present a range of estimates that takes into account all the sources of uncertainty in our modelling which lead to uncertainty in our predictions.

Section two of this blog series, starting with part five, takes us through the material necessary to go from presenting the kind of dishonest certainty of point estimates in predictions, to honest uncertainty in predictive intervals. This involves covering a lot of theoretical and methodological territory, and is fairly challenging. However we do this in order to make it easier for the end user of statistical models, decision makers, to get the kind of information they need and value most from models.

Note here I’m using \(x_j\), not \(x_i\), and that \(X\beta\) is shorthand for \(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3\) and so on. In using the \(j\) suffix, I’m referring to just one of the specific \(x\) values, \(x_1\), \(x_2\), \(x_3\), which is equivalent to selecting one of the columns in \(X\). By contrast \(i\) should be considered shorthand for selection of one of the rows of \(X\), i.e. one of the series of observations that goes into the dataset \(D\).↩︎

\(E(.)\) is the expectation operator, and \(|\) indicates a condition. So, the two terms mean, respectively, what is the expected value of the outcome if the variable of interest is ‘switched on’?, and what is the expected value of the outcome if the variable of interest is ‘switched off’?↩︎

The logistic function maps any real number z onto the value range 0 to 1. z is \(X\beta\), which in non-matrix notation is equivalent to a sum of products \(\sum_{k=0}^{K}x_k\beta_k\) (where, usually, \(x_0\) is 1, i.e. the intercept term). Another way of expressing this would be something like \(\sum_{k \in S}x_k\beta_k\) where by default \(S = \{0, 1, 2, ..., K\}\). We can instead imagine partitioning out \(S = \{S^{-J}, S^{J}\}\) where the superscript \(J\) indicates the Jth variable, and \(-J\) indicates everything in \(S\) apart from the Jth variable. Where J is a discrete variable, the effect of J on \(P(Y=1)\) is \(logistic({\sum_{k \in S^{-J}}x_k\beta_k + \beta_J}) - logistic({\sum_{k \in S^{-J}}x_k\beta_k})\), where \(logistic(z) = \frac{1}{1 + e^{-z}}\). The marginal effect of the \(\beta_J\) coefficient thus depends on the other term \(\sum_{k \in S^{-J}}x_k\beta_k\). Where this other term is set to 0 the marginal effect of \(\beta_J\) becomes \(logistic(\beta_J) - logistic(0)\). According to p.82 of this chapter by Gelman we can equivalently ask the question ‘what is the first derivative of the logistic regression with respect to \(\beta\)?’. Asking more about this to Wolfram Alpha we get this page of information, and scrolling down to the section on the global minimum we indeed get an absolute value of \(\frac{1}{4}\), so the maximum change in \(P(Y=1)\) given a unit change in \(\beta\) is indeed one quarter of the value of \(\beta\), hence why the ‘divide-by-four’ heuristic ‘works’. This isn’t quite a full derivation, but more explanation than I was planning for a footnote! In general, it’s better just to remember ‘divide-by-four’ than go down the rabbit warren of derivation each time! (As I’ve just learned, to my cost, writing this footnote!)↩︎

We should always expect the absolute value of a coefficient for a discrete variable to be less than four, for this reason.↩︎

The lower bound for the marginal effect of a discrete variable, or any variable, is zero. This is when the absolute value of the sum of the product of the other variables is infinite.↩︎

Or the base R expand.grid function↩︎

Note from Claude: The marginal effects approach demonstrated here connects directly to modern machine learning interpretability methods. SHAP (SHapley Additive exPlanations) values and partial dependence plots serve similar purposes—quantifying feature importance beyond simple coefficients. In Python, the marginaleffects package (ported from R) provides these calculations, while scikit-learn’s PartialDependenceDisplay and the SHAP library (shap.Explainer) offer ML-focused alternatives. Coursera’s “Machine Learning Specialization” by Andrew Ng and Molnar (2022) both cover how traditional statistical inference concepts like marginal effects extend to complex models.↩︎